UK AI Safety Institute Unveils AI Safety Testing Platform “Inspect”

The initiative lets startups, academics, AI developers, and international governments assess the specific capabilities of individual AI models and generate a score based on the results.

Written by: CDO Magazine Bureau

Updated 12:42 PM UTC, Thu May 16, 2024

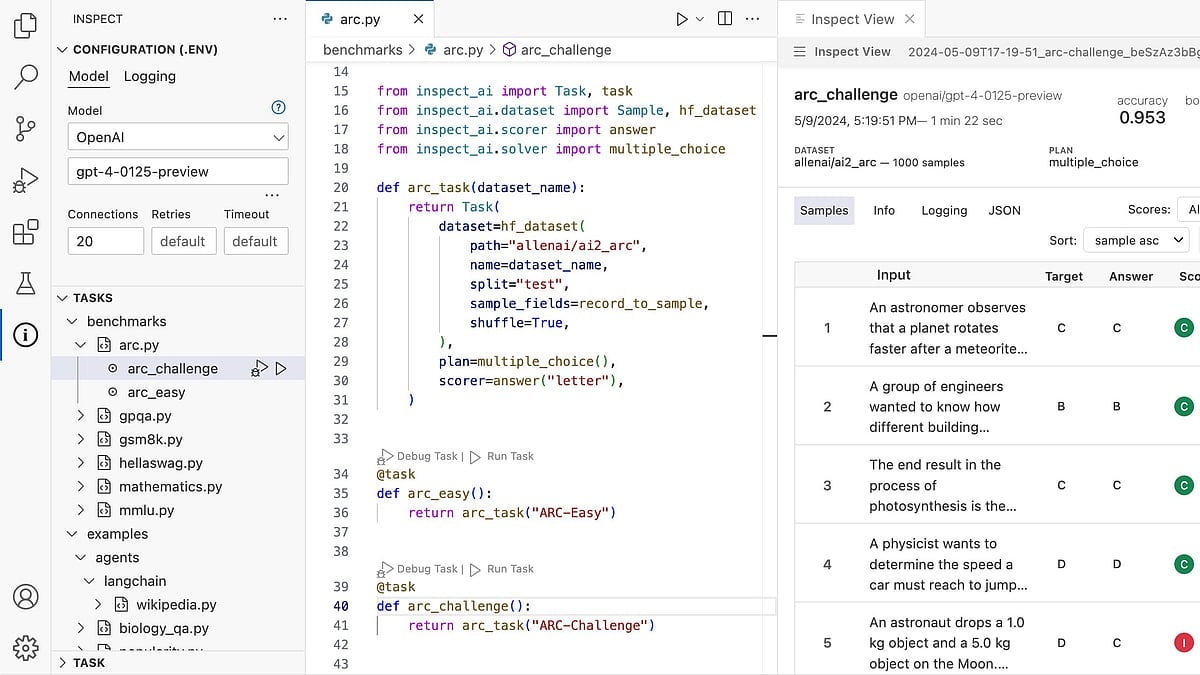

The Inspect platform

The UK AI Safety Institute has launched “Inspect,” an AI safety testing platform overseen by a state-backed body and released for wider use. Inspect is a software library that lets startups, academics, AI developers, and international governments assess the specific capabilities of individual AI models and generate a score based on the results.

“As part of the constant drumbeat of U.K. leadership on AI safety, I have cleared the AI Safety Institute’s testing platform — called Inspect — to be open-sourced,” says Michelle Donelan, the U.K.’s Secretary of State for Science, Innovation, and Technology.

“This puts U.K. ingenuity at the heart of the global effort to make AI safe and cements our position as the world leader in this space,” Donelan adds.

The announcement comes a little more than a month after the British and American governments pledged to work together on safe AI development, agreeing to collaborate on tests for the most advanced AI models.

The two countries also agreed to form alliances with other nations to foster AI safety around the world.

Alongside the launch of Inspect, the AI Safety Institute, Incubator for AI (i.AI), and Number 10 will bring together leading AI talent from a range of areas to test and develop new open-source AI safety tools.